At Nomagic, we teach robots to understand the real world. It's no secret that the real world is incredibly complex, and for some tasks you might wish for an extra pair of eyes. Some of our robots felt the same way, so we decided to help them out.

In this post, we share our experience building a system that uses the Robot Operating System (ROS1) to quickly capture color and depth images from up to 16 Intel RealSense cameras with minimal CPU usage. Source code included!

At first glance, you might think you could just buy a few USB 3 hubs, plug in all the cameras, and be done. However, getting enough USB 3 ports is only the surface-level challenge.

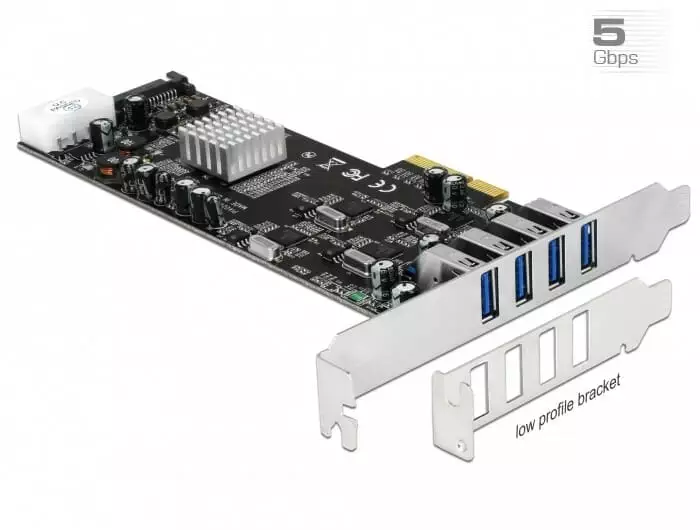

The real challenge is the limited USB 3 bandwidth. Intel has a great article explaining the details of connecting multiple cameras. The key takeaway is that you should maximize the number and quality of the USB 3 controllers driving the cameras. Since our PCs have only a single USB controller, we decided to buy this PCIe USB card, which provides a separate USB controller for each port: https://www.delock.com/produkte/2072_Type-A/89365/merkmale.html.
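To see why the number of controllers matters, here is a minimal sketch that adds up the data rates of the cameras sharing each controller and flags any controller that exceeds a budget. The per-camera rate (400 Mbit/s), the usable per-controller budget (3000 Mbit/s) and the controller names are hypothetical placeholders, not measured values; USB 3.0 signals at 5 Gbit/s, and the throughput actually usable for payload is noticeably lower.

```python
from typing import Dict, List

# Hypothetical topology model: controller name -> data rates (Mbit/s) of the
# cameras attached to it. All numbers below are illustrative assumptions.
Topology = Dict[str, List[float]]


def controller_loads(topology: Topology) -> Dict[str, float]:
    """Sum the per-camera data rates attached to each controller."""
    return {ctrl: sum(rates) for ctrl, rates in topology.items()}


def overloaded(topology: Topology, budget_mbps: float) -> List[str]:
    """Return the controllers whose total load exceeds the budget."""
    return [ctrl for ctrl, load in controller_loads(topology).items() if load > budget_mbps]


if __name__ == "__main__":
    per_camera = 400.0  # hypothetical rate of one camera, Mbit/s
    budget = 3000.0     # hypothetical usable budget per controller, Mbit/s

    one_controller = {"onboard": [per_camera] * 16}
    four_controllers = {f"pcie_port_{i}": [per_camera] * 4 for i in range(4)}

    print(overloaded(one_controller, budget))    # ['onboard']
    print(overloaded(four_controllers, budget))  # []
```

With the same sixteen cameras, spreading them over four controllers instead of one brings every controller under the (assumed) budget, which is the reasoning behind buying a card with one controller per port.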

To reach the desired number of ports (16) and make sure every camera gets enough power, we also bought four additional externally powered USB hubs: https://www.delock.de/produkte/G_64053/merkmale.html.

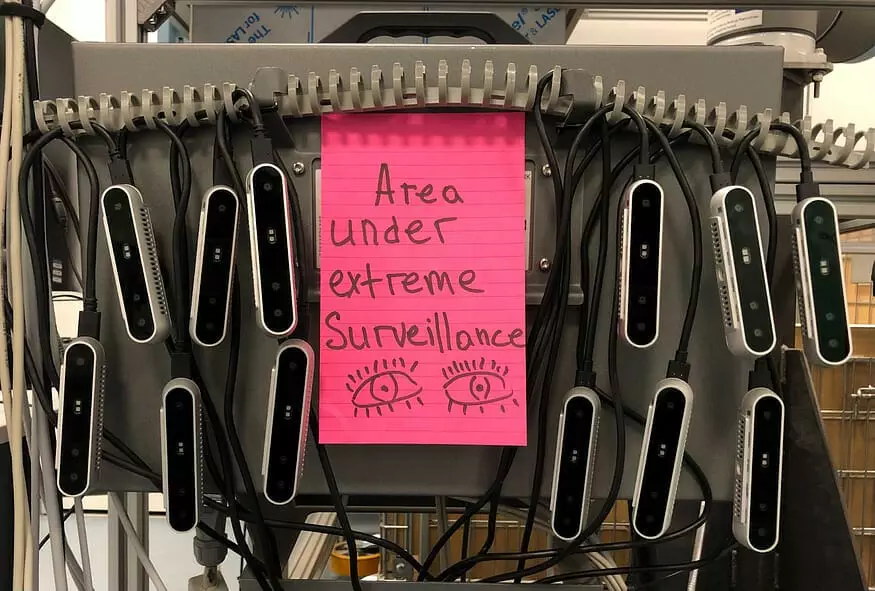

Connecting all the cameras was a lot of fun:

You can hide from many robots, but not from this one 🙂

Most of the time, a robot needs only a single camera, and even when it uses more, it is usually 2–4. Connecting 16 cameras to a single PC definitely sounds ambitious. So we thought: let's do it!

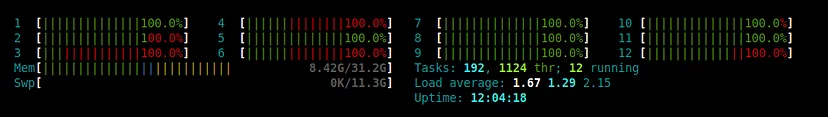

We turned the cameras on one by one and displayed the depth video on screen using realsense-ros and RViz. After a while, we noticed that the frame rate (FPS) started to drop. A quick investigation revealed the root cause:

One of the signs that the cameras are working 🙂

Linux users will probably recognize the screenshot above: the CPU is fully loaded, which is undesirable, especially if you want to run other programs on the same machine. On our PC, a single camera used about 1.2 CPU cores. The next step was to find out where that load came from.

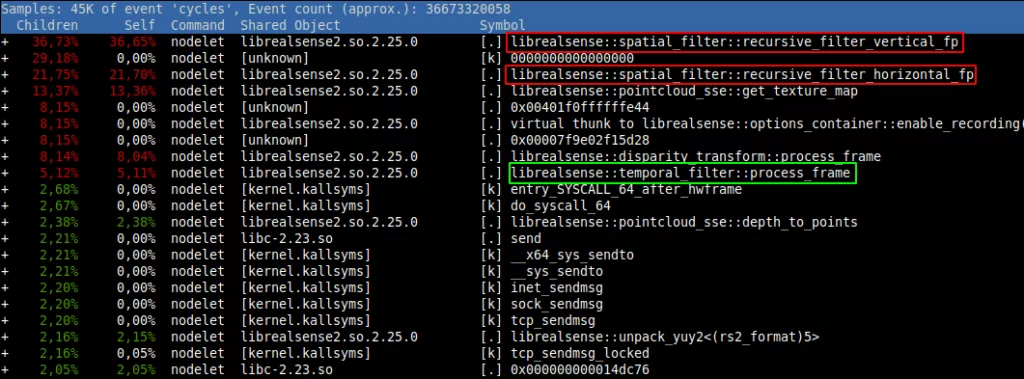

We profiled the system and found that most of the CPU load comes from post-processing in realsense-ros. Every frame received from the camera is filtered to remove noise and to fill in missing data through spatial and/or temporal interpolation. Filtering a single frame takes a few milliseconds, so around 10 cameras are enough to overload even a high-end CPU. Filtering in realsense-ros is optional, but it significantly improves the quality of the depth images, so we wanted to keep it.
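To illustrate what "temporal" means here, below is a deliberately simplified temporal filter: a per-pixel exponential moving average that reuses the previous estimate wherever the new frame has no depth (value 0). It is a hypothetical stand-in, not librealsense's temporal filter, which has more parameters and logic; frames are flat Python lists to keep the sketch dependency-free. The point it shows is that the output for a frame depends on the frames that came before it.

```python
from typing import List, Optional


class TemporalSmoother:
    """Hypothetical, simplified temporal depth filter (not librealsense's algorithm).

    Keeps a per-pixel exponential moving average. A depth value of 0 means
    "no data"; such pixels reuse the previous estimate, so the output for
    frame N depends on frames 0..N-1.
    """

    def __init__(self, alpha: float = 0.4) -> None:
        self.alpha = alpha  # weight of the newest frame
        self.state: Optional[List[float]] = None

    def process(self, frame: List[int]) -> List[float]:
        if self.state is None:
            # First frame: nothing to blend with yet.
            self.state = [float(v) for v in frame]
            return list(self.state)
        out: List[float] = []
        for prev, new in zip(self.state, frame):
            if new == 0:
                out.append(prev)  # hole: fill from history
            elif prev == 0:
                out.append(float(new))  # no history for this pixel yet
            else:
                out.append(self.alpha * new + (1 - self.alpha) * prev)
        self.state = out
        return list(out)


if __name__ == "__main__":
    smoother = TemporalSmoother(alpha=0.5)
    print(smoother.process([100, 0, 200]))  # [100.0, 0.0, 200.0]
    print(smoother.process([200, 50, 0]))   # [150.0, 50.0, 200.0]
```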

We realized that we don't actually need to filter every frame, since in the end we only use one frame every few seconds. To take advantage of this, we forked realsense-ros and adapted it to our use case. Instead of filtering every frame, we store the most recent frames in a ring buffer and run the filters only when a frame is requested through a ROS service call. We had to keep and filter several frames rather than just one, because some filters rely on previous frames.
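The idea can be sketched in a few lines of Python. This is a hypothetical illustration, not code from the fork: `LazyFilteredBuffer`, its methods and the buffer size are made-up names and values, frames are plain lists, and the ROS service is replaced by a direct method call. Incoming frames are only appended to a fixed-size ring buffer (`collections.deque` with `maxlen`); the expensive, stateful filter runs over the buffered frames, oldest first, only when a result is requested.

```python
import threading
from collections import deque
from typing import Callable, Deque, List, Optional

Frame = List[int]
Filter = Callable[[Frame], Frame]


class LazyFilteredBuffer:
    """Store raw frames cheaply; filter them only on request (hypothetical sketch)."""

    def __init__(self, capacity: int, make_filter: Callable[[], Filter]) -> None:
        # A deque with maxlen acts as a ring buffer: the oldest frame is
        # dropped automatically once `capacity` frames are stored.
        self._frames: Deque[Frame] = deque(maxlen=capacity)
        self._make_filter = make_filter
        # The camera callback and the request handler may run in different threads.
        self._lock = threading.Lock()

    def on_frame(self, frame: Frame) -> None:
        """Per-frame callback: just store the frame, no filtering."""
        with self._lock:
            self._frames.append(frame)

    def request_filtered(self) -> Optional[Frame]:
        """On-demand request (a ROS service handler in the real system)."""
        with self._lock:
            history = list(self._frames)  # snapshot; new frames won't disturb it
        if not history:
            return None
        # A fresh filter instance replays the history oldest-to-newest, so
        # stateful (e.g. temporal) filters see a consistent sequence.
        apply_filter = self._make_filter()
        result: Optional[Frame] = None
        for frame in history:
            result = apply_filter(frame)
        return result


def make_running_sum() -> Filter:
    """Toy stateful filter for the example: running sum of the first pixel."""
    total = 0

    def apply(frame: Frame) -> Frame:
        nonlocal total
        total += frame[0]
        return [total]

    return apply


if __name__ == "__main__":
    buf = LazyFilteredBuffer(capacity=3, make_filter=make_running_sum)
    for value in range(1, 6):
        buf.on_frame([value])
    print(buf.request_filtered())  # [12]: only frames 3, 4 and 5 are still buffered
```

Creating a fresh filter per request is an assumption of this sketch: it keeps requests independent, at the cost of re-filtering the whole buffer every time. Either way, the per-frame cost drops to a single append, and the filtering cost is only paid when someone actually asks for a frame.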

This optimization significantly reduced CPU usage, to about 20% of a core per camera. However, the fight wasn't over yet. Low frame rates persisted, although the pattern changed: instead of affecting all cameras, the problem became more local, with individual cameras intermittently dropping their frame rate. The (predictable) culprit showed up in the realsense-ros logs, which were full of messages like this one:
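Assuming the CPU cost scales linearly with the number of cameras, a back-of-the-envelope estimate for all 16 cameras, using the per-camera figures above (about 1.2 cores before the change and about 0.2 cores after it), is:

$$
\begin{aligned}
\text{before:}\quad & 16 \times 1.2\ \text{cores} = 19.2\ \text{cores} \\
\text{after:}\quad & 16 \times 0.2\ \text{cores} = 3.2\ \text{cores}
\end{aligned}
$$

That is a six-fold reduction, from roughly 19 cores down to roughly 3.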

13/05 10:16:06,190 WARNING [140247325210368] (backend-v4l2.cpp:1057) Incomplete frame received: Incomplete video frame detected! Size 541696 out of 1843455 bytes (29%)

Even though we were below the theoretical USB throughput, the cameras struggled to transfer complete frames. We lowered the frame rate to 6 FPS and disabled the infrared streams. Fortunately, this was enough to make the system stable.
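A rough calculation shows how much these two changes reduce the data each camera has to push over USB. The sketch below estimates the raw, uncompressed payload per camera; the 848×480 resolution, the pixel sizes (2 bytes per pixel for depth and for YUYV color, 1 byte for each infrared stream) and the 30 FPS starting point are assumptions for illustration, and USB protocol overhead is ignored.

```python
# Hypothetical bandwidth estimate; all stream settings below are assumptions.
from typing import List, Tuple

Stream = Tuple[int, int, int, float]  # (width, height, bytes_per_pixel, fps)


def stream_mbps(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Raw payload of one stream in Mbit/s (no USB protocol overhead)."""
    return width * height * bytes_per_pixel * fps * 8 / 1e6


def camera_mbps(streams: List[Stream]) -> float:
    """Total raw payload of all streams enabled on one camera."""
    return sum(stream_mbps(*s) for s in streams)


BEFORE: List[Stream] = [
    (848, 480, 2, 30),  # depth (16-bit)
    (848, 480, 2, 30),  # color (YUYV)
    (848, 480, 1, 30),  # infrared 1 (8-bit)
    (848, 480, 1, 30),  # infrared 2 (8-bit)
]
AFTER: List[Stream] = [
    (848, 480, 2, 6),  # depth at 6 FPS
    (848, 480, 2, 6),  # color at 6 FPS; infrared disabled
]

if __name__ == "__main__":
    for name, cfg in (("before", BEFORE), ("after", AFTER)):
        per_camera = camera_mbps(cfg)
        # Assumes four cameras per hub, each hub on its own controller.
        print(f"{name}: {per_camera:.0f} Mbit/s per camera, "
              f"{4 * per_camera:.0f} Mbit/s per 4-camera hub")
```

Under these assumptions, the two changes together cut the raw payload per camera by a factor of 7.5 (from 6 bytes per pixel at 30 FPS to 4 bytes per pixel at 6 FPS), regardless of the resolution chosen.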

With the battle won, we tested our solution to estimate the latencies we could achieve.

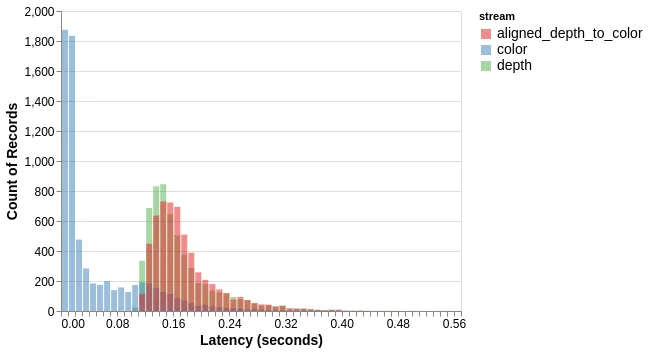

Color frames (blue) require no processing, so they can be received quickly, usually in under 10 milliseconds. Requesting a depth frame (green) triggers the pending filtering, which takes about 150 ms depending on thread scheduling. Aligned depth frames (red) require slightly more processing, hence the slightly higher latency.
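One way to collect latency numbers like these is to time each request on the client side. The sketch below is a hypothetical harness, not the authors' benchmark: `request` stands in for whatever call fetches a frame (in ROS1, for example, calling a `rospy.ServiceProxy`), and the dummy request in the example simply sleeps.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency_ms(request: Callable[[], object], n: int = 100) -> List[float]:
    """Call `request` n times and return each round-trip time in milliseconds."""
    samples = []
    for _ in range(n):
        start = time.perf_counter()
        request()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples: List[float]) -> Dict[str, float]:
    """Median, 95th percentile and maximum of at least two samples."""
    # "inclusive" keeps the percentile within the observed range.
    p95 = statistics.quantiles(samples, n=100, method="inclusive")[94]
    return {"median": statistics.median(samples), "p95": p95, "max": max(samples)}


def fake_request() -> None:
    """Dummy stand-in for a frame request: sleeps for about 5 ms."""
    time.sleep(0.005)


if __name__ == "__main__":
    print(summarize(measure_latency_ms(fake_request, n=20)))
```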

Running the cameras at a low frame rate, combined with the longer processing times, means that the frames served by our service are slightly out of date. However, if your scene is mostly static, like ours, a delay of 0.5 seconds is acceptable.

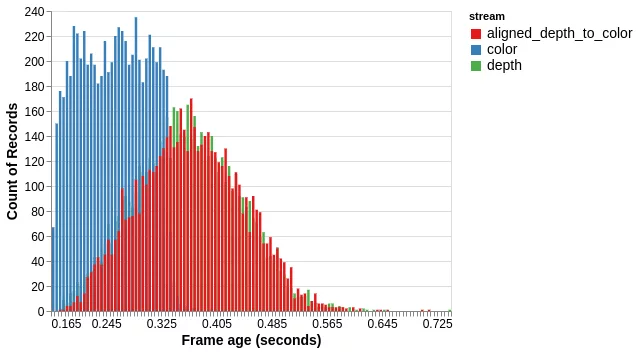

The histogram above shows the age of the received frames, measured using timestamps taken at the start of exposure (hence the minimum age equals the interval between frames, about 166 milliseconds at 6 FPS).
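The age computation itself is simple: subtract the exposure-start timestamp from the time the frame arrives, then bin the results. The sketch below assumes both timestamps are in seconds on the same clock (mapping camera timestamps onto the host clock is a separate concern); the function names and the 50 ms bin width are hypothetical. It also prints the frame interval at 6 FPS, the lower bound mentioned above.

```python
from collections import Counter
from typing import Dict, Iterable


def frame_age_ms(receive_time_s: float, exposure_start_s: float) -> float:
    """Age of a frame when it reaches the client, in milliseconds."""
    return (receive_time_s - exposure_start_s) * 1000.0


def age_histogram(ages_ms: Iterable[float], bin_ms: float = 50.0) -> Dict[float, int]:
    """Count ages per bin; keys are the lower edge of each bin in ms."""
    counts = Counter(int(age // bin_ms) for age in ages_ms)
    return {b * bin_ms: counts[b] for b in sorted(counts)}


if __name__ == "__main__":
    fps = 6
    print(f"frame interval at {fps} FPS: {1000 / fps:.1f} ms")  # 166.7 ms
    print(age_histogram([170.0, 180.0, 260.0]))  # {150.0: 2, 250.0: 1}
```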

In summary, our modification significantly reduces the CPU usage of Intel RealSense cameras when you only need to fetch a single frame occasionally. With this modification and some additional hardware, it is possible to connect and use more than a dozen cameras.

You can find the source code for this modification here: https://github.com/NoMagicAi/realsense-ros.

Piotr Rybicki

Get in touch with Nomagic and find out how our innovative technology can take your fulfillment processes to the next level.