Piotr Rybicki

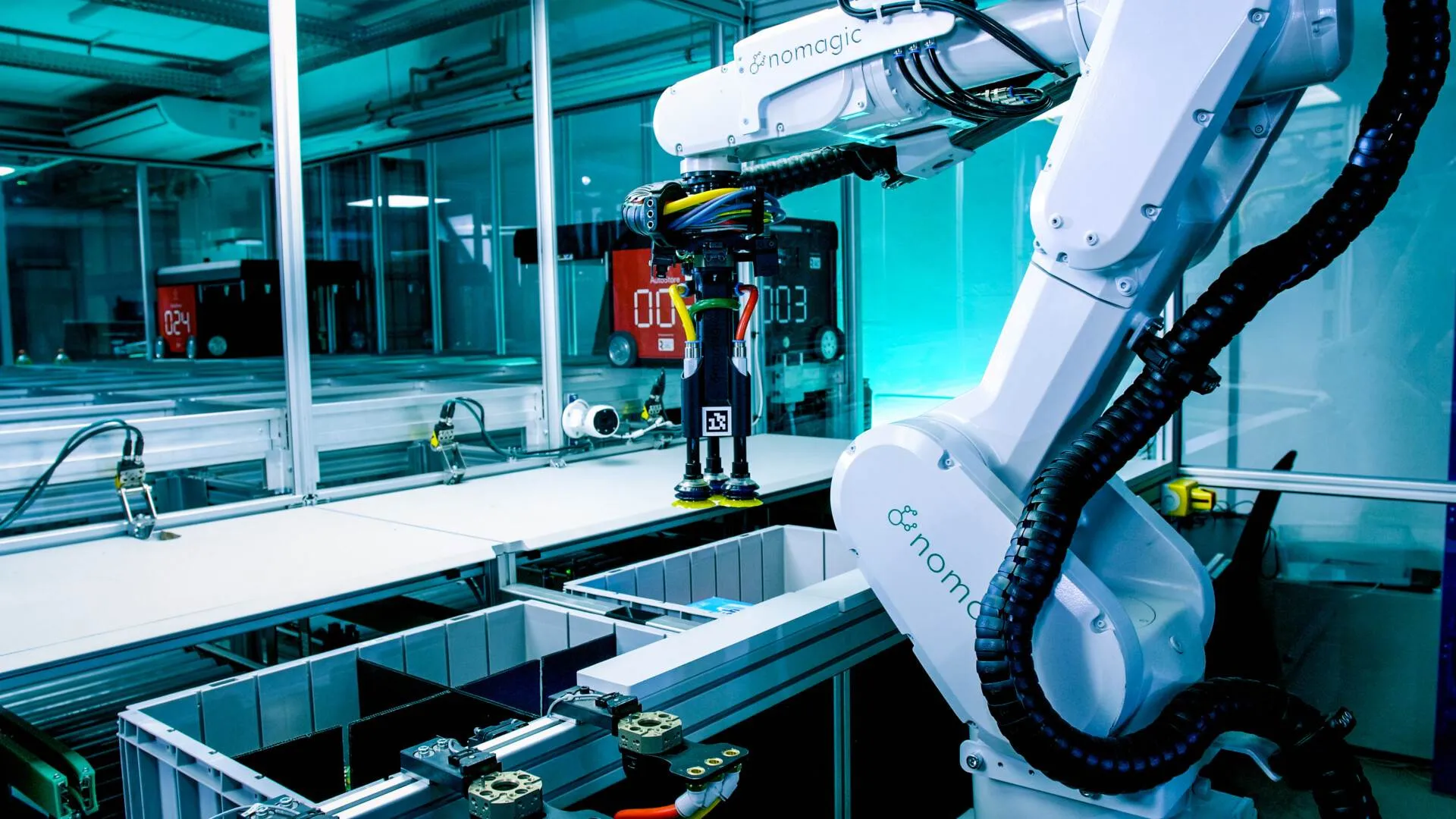

At Nomagic, we teach robots to understand the real world. It’s no secret that the real world is incredibly complex, and for some tasks, you might wish you had an extra pair of eyes. Some of our robots felt the same way, so we decided to help them out.

In this post, we share our experience in building a system that enables you to quickly capture colour and depth photos from up to 16 Intel RealSense cameras with minimal CPU usage, using the Robot Operating System (ROS1). Source code included!

At first glance, you might think you could simply purchase a few USB 3 hubs, connect all the cameras, and be done with it. However, obtaining a sufficient number of USB 3 ports is only a surface-level challenge.

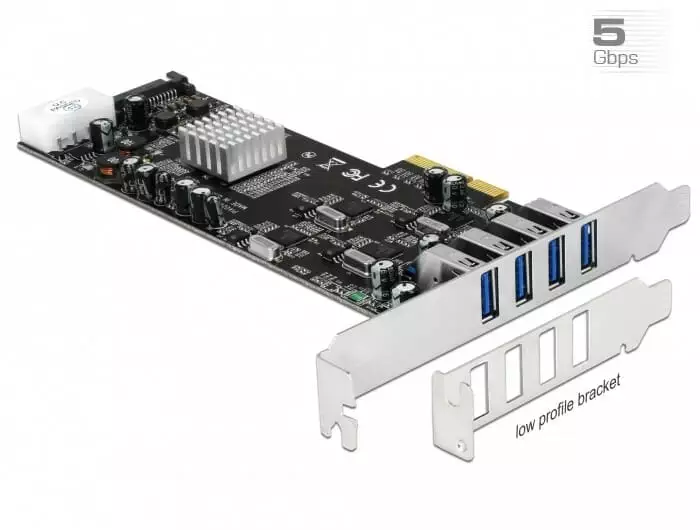

The real challenge lies in the limited USB 3 bandwidth. Intel has a great article explaining the various details related to connecting multiple cameras. The main takeaway is that you should aim to maximise the number and quality of USB 3 controllers managing the cameras. Since our PCs have only a single USB controller, we decided to purchase this PCIe USB card, which has a separate USB controller for each port: https://www.delock.com/produkte/2072_Type-A/89365/merkmale.html.

To achieve the desired number of ports (16) and ensure that each camera has sufficient power, we also purchased four additional powered external USB hubs: https://www.delock.de/produkte/G_64053/merkmale.html.

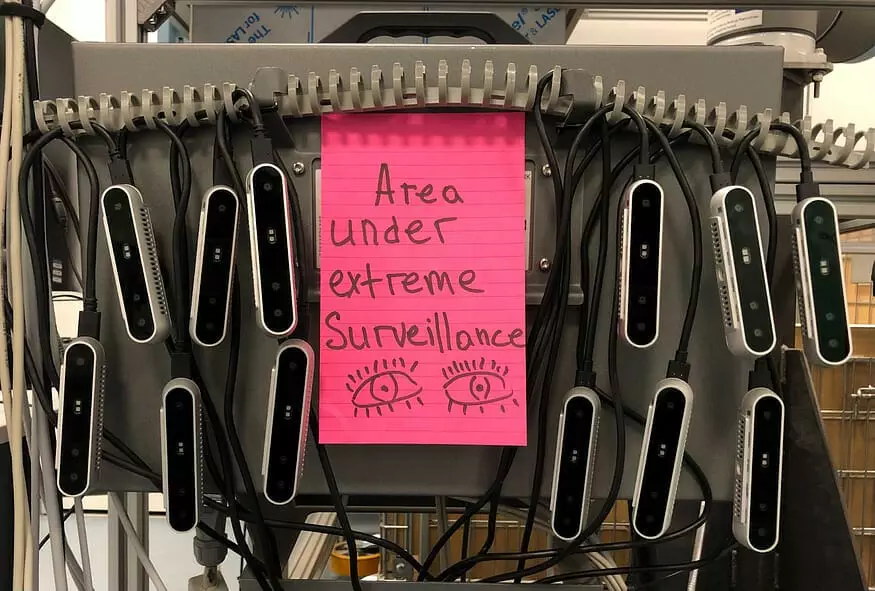

Connecting all the cameras was great fun:

You can hide from many robots, but not this one 🙂

Most of the time, a robot needs only a single camera, and even when more are used, it’s typically between 2 and 4. Connecting 16 cameras to a single PC definitely sounds ambitious. So, we thought, let’s do it!

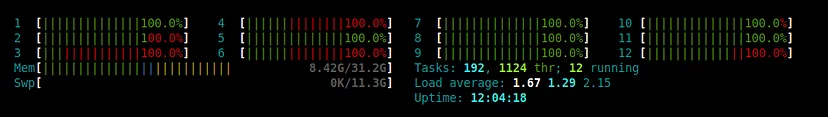

We attached and activated the cameras one by one, observing the depth video on a screen using realsense-ros and RViz. After a while, we noticed that the frame rate (FPS) began to drop. A quick investigation revealed the root cause:

One of the signs that the cameras are working 🙂

The screenshot above will likely be familiar to Linux users: full CPU utilisation, which is a problem if you intend to run other programs on the machine. On our PC, a single camera consumed approximately 1.2 CPU cores. The next step was to determine the source of the CPU load.

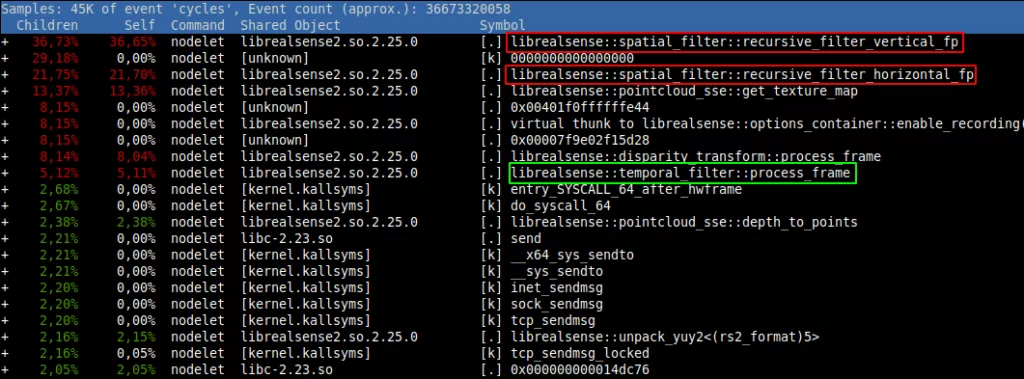

We profiled the system and found that most of the CPU load was caused by post-processing in realsense-ros. Each frame arriving from the camera is filtered to remove noise and fill in missing data using spatial and/or temporal interpolation. Filtering a single frame takes a few milliseconds, so around 10 cameras are enough to saturate even a high-performance CPU. While filtering in realsense-ros is optional, it significantly improves the quality of the depth images, so we wanted to retain it.

We observed that we didn’t actually need to filter all the frames, as we ultimately only use one frame every few seconds. To support this use case, we forked and modified realsense-ros. Instead of filtering every frame, our fork stores the most recent frames in a ring buffer and applies the filters only when a frame is requested via a ROS Service call. Retaining and filtering several frames (rather than just one) is necessary because some filters rely on past images.
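The idea can be illustrated with a minimal Python sketch. It is not the code from our fork: the class `LazyFilteredBuffer` and the toy `temporal_filter` are hypothetical names made up for this example, frames are plain lists of depth values, and a value of 0 is assumed to mean "no reading". It only shows the principle of storing frames cheaply and paying for filtering on request.

```python
from collections import deque


def temporal_filter(frame, previous, alpha=0.5):
    """Toy temporal filter (hypothetical): blend with history, fill holes (0) from it."""
    if previous is None:
        return list(frame)
    out = []
    for cur, prev in zip(frame, previous):
        if cur == 0:          # missing reading: fall back to the filtered history
            out.append(prev)
        elif prev == 0:       # no usable history for this pixel yet
            out.append(cur)
        else:                 # exponential smoothing between history and new value
            out.append(alpha * cur + (1 - alpha) * prev)
    return out


class LazyFilteredBuffer:
    """Keeps the newest raw frames and runs the filter only when asked."""

    def __init__(self, capacity, filter_step):
        self._frames = deque(maxlen=capacity)  # ring buffer: oldest frame is dropped
        self._filter_step = filter_step

    def push(self, frame):
        # Called for every incoming frame; cheap because nothing is filtered here.
        self._frames.append(frame)

    def latest_filtered(self):
        # Called on request; replays the buffered frames oldest to newest so the
        # temporal filter sees the history it depends on.
        if not self._frames:
            raise LookupError("no frames received yet")
        state = None
        for frame in self._frames:
            state = self._filter_step(frame, state)
        return state


buffer = LazyFilteredBuffer(capacity=3, filter_step=temporal_filter)
for raw in ([10, 0, 30], [12, 20, 0], [0, 22, 34], [14, 0, 30]):
    buffer.push(raw)
result = buffer.latest_filtered()
print(result)
```

In this sketch the cost of `push` does not depend on the filter at all, so the per-frame CPU cost stays small, while `latest_filtered` does work proportional to the buffer capacity each time it is called.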

This optimisation reduced CPU usage to around 20% per camera. However, the battle was not yet over. Low frame rates persisted, though the pattern changed: instead of affecting all the cameras, the problem became more localised, with arbitrary cameras intermittently losing performance. The (predictable) culprit showed up in the realsense-ros logs, which were filled with messages such as:

13/05 10:16:06,190 WARNING [140247325210368] (backend-v4l2.cpp:1057) Incomplete frame received: Incomplete video frame detected! Size 541696 out of 1843455 bytes (29%)

Even though we were below the theoretical USB throughput, the cameras struggled to transmit complete frames. We lowered the frame rate to 6 frames per second and disabled infrared streams. Thankfully, this was sufficient to achieve stability.
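For a feel of the numbers, here is a back-of-the-envelope estimate in Python. The inputs are assumptions rather than measurements: the expected frame size in the log message above (1843455 bytes) is close to 1280 × 720 × 2 bytes, so the sketch assumes 1280×720 streams at 2 bytes per pixel for both depth and colour, four cameras sharing one USB controller, and the nominal USB 3.0 signalling rate of 5 Gbit/s.

```python
USB3_NOMINAL_MBIT_PER_S = 5000.0  # nominal USB 3.0 rate; usable throughput is lower


def stream_mbit_per_s(width, height, bytes_per_pixel, fps):
    """Raw payload rate of one uncompressed video stream, in Mbit/s."""
    return width * height * bytes_per_pixel * fps * 8 / 1e6


# Assumed stream settings (not taken from our configuration files).
depth = stream_mbit_per_s(1280, 720, 2, fps=6)
colour = stream_mbit_per_s(1280, 720, 2, fps=6)

per_camera = depth + colour
per_controller = 4 * per_camera  # assumption: four cameras behind each controller
share = per_controller / USB3_NOMINAL_MBIT_PER_S

print(f"per camera:     {per_camera:.1f} Mbit/s")
print(f"per controller: {per_controller:.1f} Mbit/s ({share:.0%} of nominal)")
```

Under these assumptions the raw payload is a small fraction of the nominal rate, which matches our experience that staying below the theoretical limit is not enough on its own to guarantee complete frames.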

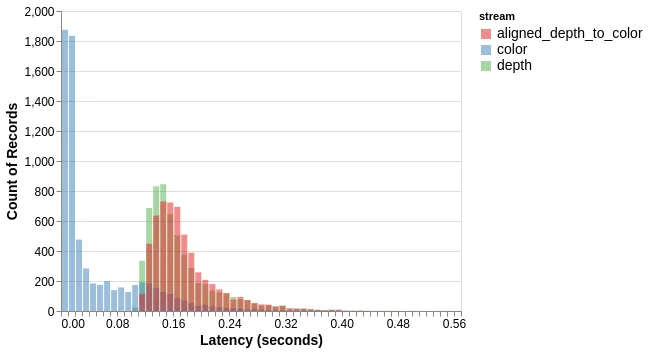

Once the battle was won, we tested our solution to assess the latencies we could achieve.

Colour frames (blue) require no processing, so they can be received quickly, usually in under 10 milliseconds. Requesting a depth frame (green) triggers the pending filtering, which takes around 150 ms, depending on thread scheduling. Aligned depth frames (red) require a little more processing, hence the slightly higher latency.

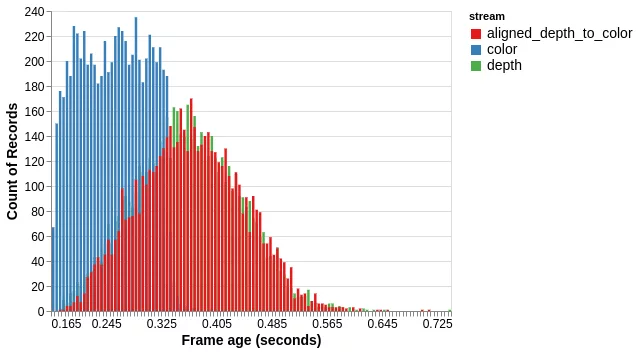

Operating the cameras at a low frame rate with longer processing times means the frames provided by our service are slightly aged. However, if your scene is mostly static, as ours is, a delay of 0.5 seconds is acceptable.

The histogram above shows the age of received frames, measured using timestamps created at the start of exposure (hence the minimum age is equal to the inter-frame interval: at 6 frames per second, 1000 / 6 ≈ 167 milliseconds).
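A rough way to reason about these ages is sketched below. This is a simplified model and not how the histogram was produced: it assumes a frame becomes available one inter-frame interval after its exposure starts, that a request arrives at a random moment within the following interval, and that processing adds a fixed delay. The function names are hypothetical.

```python
def frame_interval_ms(fps):
    """Time between the starts of two consecutive exposures, in milliseconds."""
    return 1000.0 / fps


def age_bounds_ms(fps, processing_ms=0.0):
    """Youngest and oldest expected frame age under the simplified model."""
    interval = frame_interval_ms(fps)
    # Best case: the request arrives just as the newest frame becomes available.
    youngest = interval + processing_ms
    # Worst case: the request arrives just before the next frame is ready,
    # so the newest available frame has waited one more interval.
    oldest = 2 * interval + processing_ms
    return youngest, oldest


colour_bounds = age_bounds_ms(fps=6)
depth_bounds = age_bounds_ms(fps=6, processing_ms=150.0)
print(colour_bounds, depth_bounds)
```

With 6 frames per second, this model gives ages of roughly 167 to 333 ms without processing, and roughly 317 to 483 ms with 150 ms of filtering, which is in line with the half-second delay mentioned above.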

In summary, our modification significantly reduces the CPU usage caused by Intel RealSense cameras when you only need to retrieve a single frame occasionally. With this modification and some additional hardware, it is possible to connect and utilise over a dozen cameras.

The source code for this modification can be found here: https://github.com/NoMagicAi/realsense-ros.
